The Past Has a Way of Catching up with You, or

The Democratisation of Computer Music,

10 Years On.

Warren Burt

Summary:

1. Introduction – The 2013 International Computer Music Conference

2. 1967-1975: SUNY Albany and UCSD

3. 1975-1981: Moving to Australia, Plastic Platypus

4. 1981-85: Low-cost Single-board Microcomputer

5. 1985-2000: Increased Accessibility

6. Post-2000 Period: Commuter Train Work and Computer Brain Work

7. Today: Technological Music Utopia and Irrelevant Musicians

8. Conclusion

1. Introduction – The 2013 International Computer Music Conference

Back in 2013, the International Computer Music Conference was held in Perth, Australia. It was organised by a team led by Cat Hope,[1] and they kindly invited me to give one of the keynote addresses. My topic was the “democratisation” of “computer music.” I put the words in inverted commas because both terms were, and are, points of contention, although the meanings of those terms may have changed quite a lot in the past decade. My talk was given from an Australian perspective because that’s where I’ve mostly lived for the past 47 years. With an international audience at the conference, I wanted to give them a quick introduction to the somewhat unusual context in which the conference was taking place. Australia has, in some ways, a very different cultural context from Europe, North America and other places, and in other ways a context that is very similar to theirs. I remember Chris Mann, poet and composer, picking me up at the airport on my first arrival in Australia in 1975 and saying, “OK, ground rules: We speak the same language, but it’s not the same language.” My experiences over the next few years were to show me, in exquisite detail, the many nuances of difference between Australian English and the Englishes of the rest of the world. And in the way of things, the Australian English of a half-century ago is not the Australian English of today. I’ve probably become too acclimatised to the language after all this time, but many of the unique characteristics of Australian English I noticed back then have since disappeared.

My point back then was twofold: first, that advances in technology were making the tools of what we called “computer music” accessible to many more people; and second, that the definition of what was considered “computer music” was itself changing. In 2013, I mentioned Susan Frykberg[2] asking me whether I was talking about “democratisation” or “commercialisation.” This seemed like a relevant point to raise at the time. Since then, the proliferation of cell phones and other hand-held digital technology has made her point, if not moot, at least less pointed than it was. With “the world” now thoroughly united by miniature communications hardware, it seems that the technology has become neither democratised nor commercialised (or both democratised and thoroughly commercialised), but simply ubiquitous: our continuous cultural environment. I was recently asked by Richard Letts, the editor of the music ezine Loudmouth, to write an article about the current state of music technology. To show how widely advanced music technology had spread, I focused the article on the music technology available for the iPhone, showing that most of the sophisticated music technology applications of the past were now available, to some extent, on that most widespread of consumer electronic devices.

The same is true of the term “computer music.” In 2013 it denoted both experimental music using computers and popular and dance musics made with digital technology. If anything, the range of musics made using music technology has become even wider. I pointed out, humorously, that the British magazine called “Computer Music” was devoted to “how to” articles for those making digital dance music in their bedrooms, and not to articles dealing with the finer points of advanced synthesis. In the past few years, Future plc, the British publisher behind “Computer Music,” acquired the American magazines “Keyboard” and “Electronic Musician,” which occasionally covered topics of interest to the more “avant-garde” side of things. These days, the magazines owned by Future not only have overlapping spheres of interest, but some articles that appear in one also appear in another. The focus still remains on pop/dance music made with commercially available technology, but with the passing of time, some formerly obscure topics, such as granular synthesis, are now covered in their pages, albeit usually without acknowledging the people who pioneered those techniques.

As a means of showing how the term “computer music” had changed, in my original essay I included a brief survey of things I had done over the years and how those activities fitted, or did not fit, at the time, under the umbrella of “computer music.” My approach was humorous, and more than slightly ironic.

2. 1967-1975: SUNY Albany and UCSD

Here’s where we get into a bit of autobiography. I’ve watched the term change meanings ever since the 1960s. Let’s get into our WABAC machine, or TARDIS, depending on which TV shows you watched as a kid. In 1967, I entered the State University of New York at Albany. The university soon acquired a very large Moog system designed by Joel Chadabe,[3] which included a digital device, made by Bob Moog, that allowed various kinds of synchronization and rhythmic triggering. The university also had a computer centre, where people did projects involving piles of punch cards processed in batch mode. I wasn’t attracted to the computer courses, but I was immediately attracted to the Moog. Two of my fellow students, Randy Cohen and Rich Gold, on the other hand, immediately began working at the computer centre, submitting their piles of punch cards and waiting long periods for their results. I particularly remember that Randy wrote a program to produce experimental poetry. I was absolutely thrilled with his results, which played with sense and nonsense in a way that I thought was very clever. Randy, on the other hand, who was soon to embark on a career as a comedy writer, thought that the amount of labour required to get results that only a few weirdos like me would dig was too great. So, in my earliest work, I felt there was a divide between “electronic musicians” and “computer artists,” and I was, for the moment at least, on the “electronic musician” side of things.

In 1971, I went to the University of California, San Diego, and soon became involved with the Centre for Music Experiment (CME).[4] This was a facility which had both analogue and digital music labs, as well as projects involving dance, multimedia, video, and performance art. There was a huge computer[5] lovingly tended by several of my friends. Ed Kobrin[6] was there at the time with his hybrid system, which had a small computer generating control voltages for analogue modules.

I ran a small lab which had a Serge synthesizer,[7] a John Roy/Joel Chadabe-designed system called Daisy (a very interesting random information generator), and some analogue modules designed by another of the fellows, Bruce Rittenbach. We could also take control voltages from the output of the central computer. My own work still involved using “devices with knobs”; the world of “lines of code” was still opaque to me, although I did work on several projects where other people generated control signals with “lines of code” while I adjusted the “devices with knobs.” There was also a social divide I noticed. While my singer and cellist friends and I could hardly wait for the day to be over to head down to Black’s Beach,[8] our computer friends would keep working on their code, usually far into the night. There was a certain necessary obsessiveness involved in working with computers at that time, which distinguished “real computer musicians” from the rest of “us.”

Of course, we acknowledge that the distinction is also faintly ridiculous, smacking as it does of those silly old arguments about “real men,” or their non-sexist alternative, the “authentically self-realised person.”

My interest in making technology more accessible was already active at this time. From my SUNY Albany friends Rich Gold and Randy Cohen, then doing postgraduate studies at the California Institute of the Arts, I learned about Serge Tcherepnin and his “People’s Synthesizer Project.” The idea was to have a synthesizer kit, costing about $700, that people could assemble as a collective. Affordability, accessibility, and being part of a community were all very appealing things. And the synthesizer was designed by experimental musicians for experimental musicians. There was a fair amount of what would become known as empowerment in the project as well. At the same time, for my Master’s project, I began to design a box of electronics that became known as Aardvarks IV. Made of digital circuitry, with hand-made DACs, I described it as “a hard-wired model of a particular computer composing program.” My need for knobs – that is, for a device I could physically perform with – was still paramount. My approach to digital accuracy was a bit idiosyncratic: uniqueness and funk were part of my aesthetic.

An illustration of what “funk” in electronic circuit design consisted of can be seen in the design of the DACs on Aardvarks IV. Following Kenneth Gaburo’s[9] suggestion, I used very low-quality resistors in making the digital-to-analog converters.


At a time when DACs were viewed as utilitarian devices to be made as precise as possible, in the design of this box, I was trying to treat a utilitarian device as a source of variation, of creative unpredictability. This interest in creative unpredictability probably distinguished me from the rest of the guys in the back room at CME. That and the fact that I would rather be at Black’s Beach than in the back room.
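Why imprecise resistors produce “funk” can be illustrated numerically. The essay does not say which DAC circuit Aardvarks IV used, so this Python sketch assumes a hypothetical 4-bit binary-weighted resistor DAC. The function names and error values are illustrative only and do not describe the actual box. With precise resistors the output is an even staircase; with mismatched ones the steps become uneven and can even run backwards.

```python
import random

def dac_weights(errors):
    """Per-bit weights of a binary-weighted resistor DAC.

    Bit i nominally drives a resistor of R / 2**i, so it contributes a current
    proportional to 2**i. errors[i] is that resistor's fractional deviation
    (0.2 means 20% too high); current is inversely proportional to resistance.
    """
    return [2 ** i / (1 + e) for i, e in enumerate(errors)]

def convert(code, weights):
    """Output level for an integer code, normalised to the ideal full scale."""
    full_scale = 2 ** len(weights) - 1
    return sum(w for i, w in enumerate(weights) if (code >> i) & 1) / full_scale

def downward_steps(weights):
    """Codes whose output is lower than the previous code's (non-monotonic steps)."""
    levels = [convert(c, weights) for c in range(2 ** len(weights))]
    return [c for c in range(1, len(levels)) if levels[c] < levels[c - 1]]

def cheap_resistor_errors(bits, tolerance, seed=None):
    """Random deviations within +/- tolerance, as from a bag of cheap resistors."""
    rng = random.Random(seed)
    return [rng.uniform(-tolerance, tolerance) for _ in range(bits)]

precise = dac_weights([0.0] * 4)
print(downward_steps(precise))      # [] : an even staircase

# Lower-bit resistors 20% low and the top one 20% high: code 7 now comes out
# higher than code 8, so the staircase drops at its midpoint.
mismatched = dac_weights([-0.2, -0.2, -0.2, 0.2])
print(downward_steps(mismatched))   # [8]

funky = dac_weights(cheap_resistor_errors(4, 0.2, seed=42))
print([round(convert(c, funky), 3) for c in range(16)])
```

A precision design tries to eliminate exactly this kind of backward step at code 8. In the approach described above, the same irregularity becomes a source of variation.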

Computers at the time were very hungry beasts, devouring resources around them. Now that they’ve totally taken over, they can afford to be more benign, but in those early days, it was a survival-of-the-fittest situation. For example, when I was at UCSD, CME had projects in many different fields. By the time my wife, Catherine Schieve,[10] arrived there in the early 1980s, the multidisciplinary CME was well on the way to becoming exclusively a computer arts centre, and she too remembers a social divide between the computer people and the rest of the musicians. What also distinguished the “computer guys” from others was the amount of their output. It was still the norm for a computer person to work for months to produce one short piece. For those of us who wanted a lot of output, fast, working exclusively with computers still wasn’t the way to go. Eventually, the organization evolved into CRCA, the Center for Research in Computing and the Arts. In 2013, I looked at the CRCA website and saw that it now had a focus on multidisciplinary research, with some quite fascinating projects. However, visitors from UCSD to the 2013 conference told me that the CRCA had since been shut down. Another one bites the dust!

3. 1975-1981: Moving to Australia, Plastic Platypus

Somewhere between the 80s and today, “computer music” became a field that embraced the widest range of aesthetic positions. Today, just about the only common factor across the field is the use of electricity, and usually, a computer (or digital circuit) of some kind. But stylistically, we’re in a period of “anything goes.”

In the late 70s and early 80s, things changed. New, tiny computers began appearing and were applied to music-making tasks. Several promising systems were designed[11] that basically hid the computer behind a sort of musician-friendly interface. At the same time, a whole series of microcomputers, usually in build-your-own kit form, began to appear. A divide soon developed in the computer music world between the “mainframe guys” – those who preferred to work on large, expensive, institutionally based computers – and the “perform it in real-time folks” – those who preferred to work on small, portable microprocessor-based systems that they could afford to own themselves. Georgina Born’s Rationalizing Culture,[12] her study of the sociology of IRCAM in the 1980s, included a look at how George Lewis,[13] with his microcomputer-based work, fared in the mainframe-and-hierarchy-based world of IRCAM.

In 1975, I arrived in Australia. I set up an analogue synthesis and video synthesis studio at La Trobe University in Melbourne. Graham Hair[14] installed a PDP-11 computer and began some computer work with it. Following on from my work on “Aardvarks IV” at UCSD, I began once again working with digital chips. Inspired by Stanley Lunetta’s[15] example, I made a box, “Aardvarks VII,” exclusively out of 4017 counter/divider chips and 4016 gate chips. This was the rawest kind of digital design. The chips were simply soldered onto printed back-plane boards: the plastic faceplate of the synthesizer had the circuit connections printed on the back, and the chips were soldered directly onto these printed connectors. No buffering, no nothing. Just the chips. It was mainly designed to work with just intonation frequencies, and it gave me many more modules to play with, all in real time. The physical-performance, combinational-module-based patching aesthetic was still the paradigm for me. So, by this time, 1978-79, I felt I was an electronic musician who was working with digital circuitry, but I still wasn’t that rarest of beasts, the “computer musician.”
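Integer frequency division is one standard way to get just-intonation relationships out of counter chips. Assume, for illustration only, that each 4017 is wired to divide a common clock by a whole number from 2 to 10. The actual Aardvarks VII patching isn't described here. Under that assumption every output is a subharmonic of the clock, and the interval between any two outputs is an exact whole-number ratio. The function names and the 2400 Hz clock in this Python sketch are hypothetical.

```python
import math
from fractions import Fraction

def divider_outputs(clock_hz, divisors):
    """Frequency of each divide-by-n output driven from one common clock.

    Assumption for illustration: each CD4017 is wired to divide its clock by n
    (2 <= n <= 10), so output n runs at clock_hz / n.
    """
    return {n: clock_hz / n for n in divisors}

def ratio_between(a, b):
    """Exact frequency ratio of the divide-by-a output to the divide-by-b output."""
    return Fraction(b, a)  # (f / a) / (f / b) == b / a

def cents(ratio):
    """Size of an interval in cents (1200 cents per octave)."""
    return 1200 * math.log2(float(ratio))

outputs = divider_outputs(2400.0, range(2, 11))
for n, hz in outputs.items():
    print(n, round(hz, 2), ratio_between(n, 2))  # frequency, ratio to the top output

triad = [outputs[n] for n in (6, 5, 4)]
print(triad)                                      # [400.0, 480.0, 600.0]
print(ratio_between(5, 6), ratio_between(4, 6))   # 6/5 3/2
```

Dividing by 4, 5 and 6 gives frequencies in the ratio 15:12:10, a just minor triad. That is the undertone series, which integer division produces naturally.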

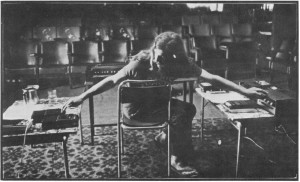

At the same time, I became involved with using the lowest of low technology – that is, the cheapest kind of consumer electronics, at the bottom of the economic scale – to make music. Ron Nagorcka[16] (whom I had first met at UCSD) and I formed a group called Plastic Platypus, which made live electronic music with cassette recorders, toys, and electronic junk. Some of our setups were very sophisticated, the low-tech and lo-fi nature of our tools concealing some very complex systems thinking, but our work came out of an ideological questioning of the nature of high fidelity. While we were happy to work in institutions which could afford good loudspeakers, etc., we were also aware that the cost of audiophile systems was prohibitive for many people. Since one of our chief reasons for forming the group was to make work on the most common types of equipment, to show that electronic music could be accessible to many, we embraced the sonic quality of the cassette recorder, the tiny loudspeaker swung on a cord, the toy piano or xylophone. As Ron eloquently put it, “the very essence of electronic media is distortion.” Technology, of course, would overtake us in the long run, and the availability of sound quality to “the masses” became a non-issue by the late 1980s, but our serious engagement with the problems and joys of low technology was fun while it lasted.

Ron and I (and various other people who were working with cassette technology at the time, such as Ernie Althoff[17] and Graeme Davis[18]) agreed on the basic structure of our work with this technology. A multi-generational feedback process, like the one in Alvin Lucier’s “I Am Sitting in a Room,”[19] formed the basis for much of our work. In this process, a sound is made on one machine and simultaneously recorded on a second machine. The second machine is then rewound, and the playback from that machine is recorded on the first machine. Over the course of several generations of this, thick textures of sound, surrounded by gradually accumulating acoustic feedback, result. In Ron’s “Atom Bomb,” for two performers and four cassette recorders, he added the idea of fast-forwarding and rewinding cassettes in progress to create a random distribution in time of the source sounds as we recorded them. At the end of this section, the four cassettes were rewound and played from the four corners of the room, creating a four-channel “tape piece” which the audience had seen assembled in front of them. In my piece “Hebraic Variations,” for viola, two cassette recorders and a portable speaker on a very long cord, I played (or attempted to play) the melody of George Gershwin’s “Summertime” (I’m a very inadequate viola player). While I was playing this melody as an endless loop, Ron recorded about 30 seconds of my playing on one cassette recorder (the “recorder”), moved that tape to a second machine (the “player”), started another cassette recording in the first machine, and then swung the loudspeaker of the second machine in a circle around his head for about a minute. This created Doppler shifts and made a thicker texture out of my viola playing. After 5 or 6 generations of this, a very thick soundscape of glissandi, out-of-tune playing and many kinds of tone clusters was assembled. The inadequate technology and my inadequate playing multiplied each other, creating a thick microtonal sound world.
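The layering in this multi-generation process can be sketched as a toy model. It is a deliberate simplification under stated assumptions. Each playback is treated as a gain change plus a one-pole low-pass filter, a crude stand-in for the cassette deck, the small loudspeaker and the room, plus optional hiss. The names `dub` and `run_generations` are hypothetical. The model shows how each pass lays new live sound over a duller, quieter copy of everything recorded before.

```python
import random

def lowpass(signal, alpha):
    """One-pole low-pass filter (0 < alpha <= 1); smaller alpha loses more treble."""
    out, y = [], 0.0
    for x in signal:
        y += alpha * (x - y)
        out.append(y)
    return out

def dub(previous_tape, live, rng, gain=0.8, alpha=0.5, hiss=0.0):
    """One generation: the previous tape is played back through the lossy chain
    and recorded on the other machine together with new live sound."""
    played = lowpass(previous_tape, alpha)
    return [new + gain * old + rng.uniform(-hiss, hiss)
            for new, old in zip(live, played)]

def run_generations(live_takes, seed=0, **chain):
    """Apply dub() once per live take; return the tape contents after each pass.
    Which physical machine holds the tape doesn't matter in this model."""
    rng = random.Random(seed)
    tape = [0.0] * len(live_takes[0])
    history = []
    for live in live_takes:
        tape = dub(tape, live, rng, **chain)
        history.append(tape)
    return history

# One click in the first take and silence in the next four: each later
# generation holds a quieter, more smeared copy of the original click.
n = 16
takes = [[1.0] + [0.0] * (n - 1)] + [[0.0] * n for _ in range(4)]
for g, tape in enumerate(run_generations(takes), start=1):
    print(g, [round(x, 3) for x in tape[:6]])
```

Passing non-silent live takes, or a non-zero `hiss`, makes the material accumulate instead of simply fading.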

There was pretty much complete unanimity between Ron and me in making the Plastic Platypus repertoire. We mostly left the composing of pieces to each other, and then learned the other composer’s desires as best we could. The level of trust and agreement between us was very high. A couple of years ago, Ron found some cassettes of Plastic Platypus performances, dubbed them, and sent them to me. A lot of our old favorites were there and were immediately recognizable. But occasionally there was a piece that bewildered both of us: we couldn’t figure out who had composed it, or in what circumstances it was recorded. Maybe these pieces were improvisations where our authorship was obscured by the processes we used.

Ron Nagorcka at Clifton Hill Community Music Centre, 1978

In addition to my work with analogue synthesizers, making my own digital circuits and working with low-tech, my own involvement with computers now began in earnest.[20] On trips back to the US, Joel Chadabe kindly lent me his studio, and for the first time, I actually used code to determine musical events. The results occurred in almost real time, so the “knob twiddler” in me was satisfied. Later, back in Australia, in 1979, I worked with the Synclavier at Adelaide University at the invitation of Tristram Cary,[21] and in 1980 in Melbourne, I asked for access to the Fairlight CMI at the Victorian College of the Arts and learned the ins and outs of that machine. I’d caught the virus of owning my own computer system.[22] A Rockwell AIM-65 microcomputer was my choice, so I immersed myself in learning this machine and built my own interface for it in an extremely idiosyncratic way. Then, when I expanded the AIM’s memory to 32K, I was hot. Real-time sound synthesis (using waveforms derived from the code in memory) was now possible. Using the AIM-65 like this, and processing its output with the Serge, I guess I was finally a “computer musician,” but I don’t know if I was a “real” one. That is, my approach was still idiosyncratic, and my impulses towards making the equipment more accessible to all, using myself as an example (something a Marxist might cringe at), still seemed, in my own mind at least, to distinguish me from my mythical straw man: the elitist mainframe computer operator, obsessed with perfection and repeatability, who still didn’t want to go to the beach.
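The essay doesn’t say how the AIM-65 turned memory contents into waveforms. One generic way to hear arbitrary bytes as sound is wavetable lookup: treat a block of memory as one cycle of a waveform and step through it with a phase accumulator. The Python sketch below assumes that approach. The function names and the sample program bytes are hypothetical, though the bytes are valid machine code for the AIM-65’s 6502 processor.

```python
def bytes_to_wavetable(memory):
    """Read unsigned 8-bit values (0..255) as signed samples in [-1.0, 1.0)."""
    return [(b - 128) / 128 for b in memory]

def wavetable_oscillator(table, freq_hz, sample_rate, n_samples):
    """Non-interpolating phase-accumulator oscillator: one pass through the
    table is one cycle of the waveform."""
    size = len(table)
    step = freq_hz * size / sample_rate  # table positions to advance per sample
    phase, out = 0.0, []
    for _ in range(n_samples):
        out.append(table[int(phase)])
        phase = (phase + step) % size
    return out

# Six bytes of 6502 machine code (LDA #$00 / STA $0200 / RTS) heard as a waveform.
program = bytes([0xA9, 0x00, 0x8D, 0x00, 0x02, 0x60])
table = bytes_to_wavetable(program)
print(wavetable_oscillator(table, 1000, 6000, 6))
# [0.3203125, -1.0, 0.1015625, -1.0, -0.984375, -0.25]
```

Changing `freq_hz` changes only the read speed through the table. The timbre comes entirely from whatever bytes happen to be in memory.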

4. 1981-85: Low-cost Single-board Microcomputer

My adventures in the low-cost single-board microcomputer world occupied me, on and off, from about 1981 to 1985.[23] The overarching title for the work I did with this system between 1982 and 1984 was Aardvarks IX. One of its movements was “Three Part Inventions (1984),” a semi-improvised piece in which I used the typewriter keyboard of the AIM-65 as a musical keyboard. With the system programmed in FORTH, I could retune the keyboard to any microtonal scale at the touch of a button.[24] In this piece, I combined my “computer-musician” chops with my interest in democratised cheap technology and an interest in non-public, distributed ways of circulating music. Each morning (in June 1984, I believe it was), I would sit down and improvise a version of the piece, recording that morning’s improvisation to a high-quality cassette. I believe I made 12 unique versions of the piece like this. I also made one more version of the piece, recorded to a reel-to-reel recorder, and kept that to use in the recorded version of the whole cycle. Each of the 12 unique versions was mailed to a different friend as a gift. Of course, I didn’t keep a record of which 12 friends I sent the cassettes to. So, in this one piece, I combined my interests in microtonal tuning systems, improvisation, real-time electronic processes, the use of cheap(er) technology (the AIM computer and the cassette recorder), mail art, and distributed music networks. I wanted to have it all: serious high-tech research and proletarian publishing and distribution networks, done with homemade electronic circuitry and hobbyist-level computing. Not surprisingly, some of my friends who lived in the “high end” of the “computer music” world found a number of points of contention with my choices of instrument, performance and distribution in this piece.
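The essay doesn’t document the FORTH program, but the idea of retuning at the touch of a button is easy to sketch. Each key selects a scale degree, and the tuning is a swappable table of ratios. The following Python sketch works under those assumptions. The key row, scale tables, 220 Hz base frequency and class name are all hypothetical.

```python
# Hypothetical tunings, each listed as frequency ratios within one octave.
SCALES = {
    "12-equal": [2 ** (k / 12) for k in range(12)],
    "19-equal": [2 ** (k / 19) for k in range(19)],
    "just-major": [1, 9 / 8, 5 / 4, 4 / 3, 3 / 2, 5 / 3, 15 / 8],
}

# Hypothetical: one row of typewriter keys, from lowest to highest pitch.
KEY_ROW = "zxcvbnm,./"

class MicrotonalKeyboard:
    def __init__(self, scale_name, base_hz=220.0):
        self.base_hz = base_hz
        self.retune(scale_name)

    def retune(self, scale_name):
        """Swap the whole tuning table at once (the 'touch of a button')."""
        self.scale = SCALES[scale_name]

    def frequency(self, key):
        """Map a key to a frequency; keys beyond one scale length wrap into higher octaves."""
        degree = KEY_ROW.index(key)
        octave, step = divmod(degree, len(self.scale))
        return self.base_hz * self.scale[step] * 2 ** octave

kb = MicrotonalKeyboard("just-major")
print([round(kb.frequency(k), 2) for k in "zxcvbnm,"])
# [220.0, 247.5, 275.0, 293.33, 330.0, 366.67, 412.5, 440.0]

kb.retune("19-equal")  # same keys, new microtonal pitches
print([round(kb.frequency(k), 2) for k in "zxcv"])
```

The keys store only scale degrees, not pitches. A single table swap therefore retunes every key at once, and scales with more or fewer than twelve notes per octave need no special handling.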

A couple of issues seemed relevant then and, to a degree, still are. One is the question: “How much building from the ground up do you want to do?” After all, the reason we were doing this building was that the equipment was expensive and mostly confined to institutions. Today, we have a spectrum that ranges from closed apps which do only one thing well (hopefully) to projects where you basically design your own chips and their implementation. Although these are slightly more extreme examples, that was the spectrum of choice available to us back then as well: home-brew versus off-the-shelf, and to what degree?

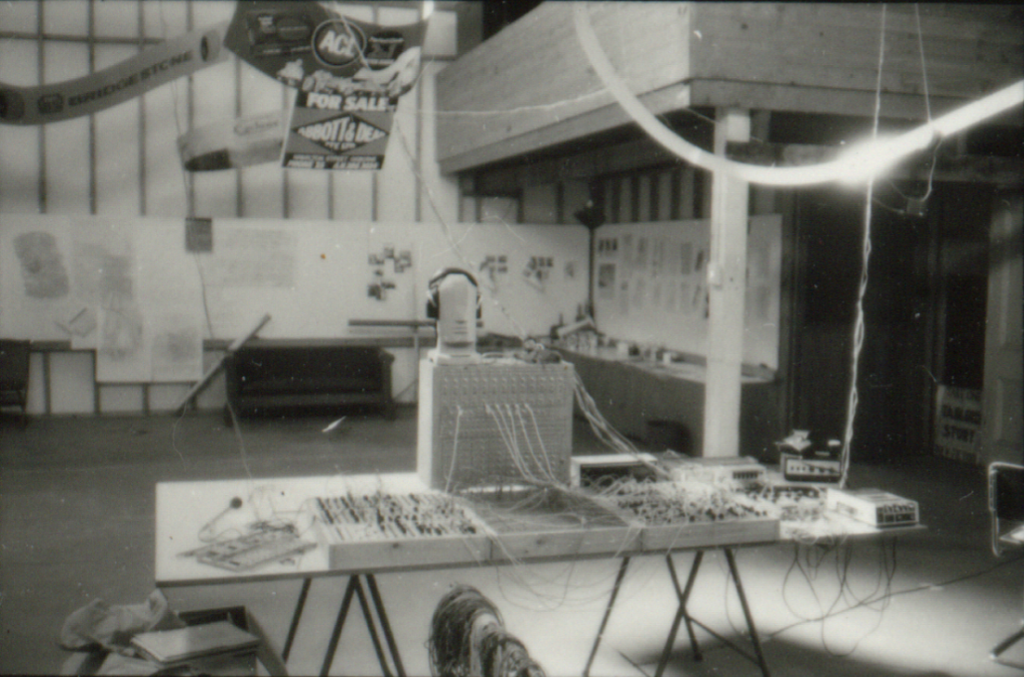

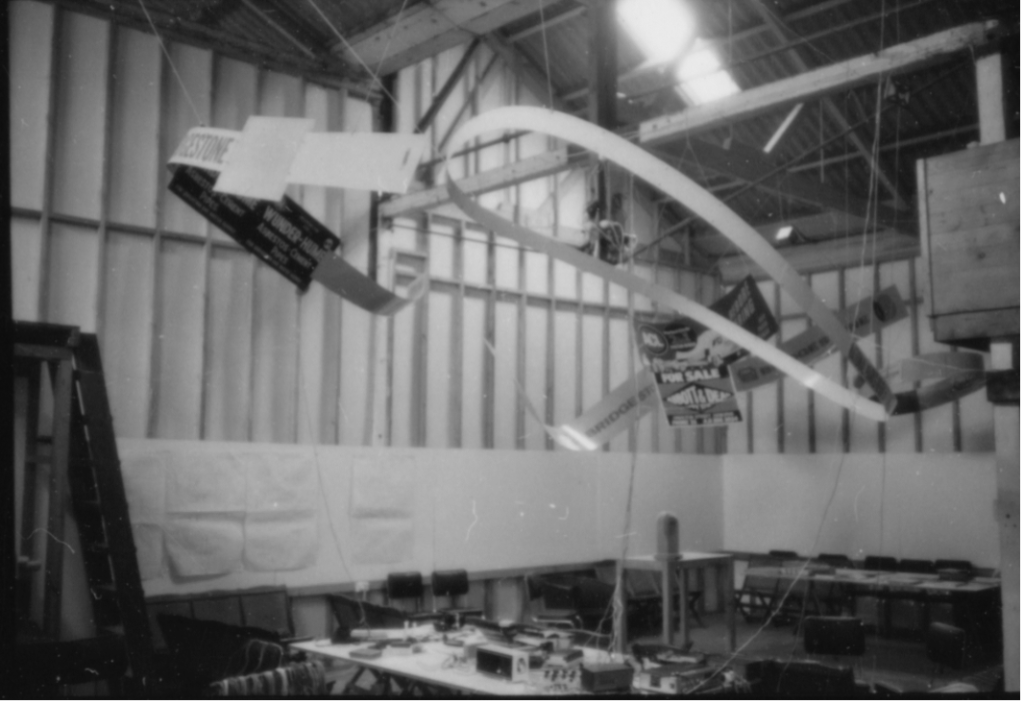

Another issue was that of ownership. Were you using someone else’s tools, whether those of an institution you were associated with or a friend’s gear that you used while visiting them? Or were you using your own tools, which you could develop an ongoing relationship with? At this point in my life, I was doing both. Encouraged by the example of Harry Partch,[25] who during my years at UCSD (1971-75) was still alive and living in San Diego, and directly encouraged by my teacher, Kenneth Gaburo, I decided that although I would work in institutional facilities if they were available, I would prefer to own my own equipment. Here are two pictures of Le Grand Ni, a 1978 installation at the Experimental Art Foundation, Adelaide.

Warren Burt: Le Grand Ni, Experimental Art Foundation Adelaide, 1978. Aardvarks IV (Silver Upright Box),

Aardvarks VII (flat panel in front of Aardvarks IV),

transducers on metal advertising signs used as loudspeakers.

Photo by Warren Burt.

Le Grand Ni, 1978 – view of metal sign loudspeakers.

Photo by Warren Burt

Here’s a link to an excerpt from the 5th movement. This movement is played through regular loudspeakers, not the metal sculpture speakers:

Warren Burt, “Le Grand Ni,” excerpt of 5th movement.

In more recent years, I’ve done very little work with multichannel sound systems, because I haven’t had either the space or the time to do so. Just recently, however, I received a commission from MESS, the Melbourne Electronic Sound Studio, to compose a piece for their 8-channel sound system. I composed it in September and October 2022 and presented the new 8-channel work in concert on October 8, 2022, at the SubStation in Newport, Victoria. (Many thanks to MESS for this opportunity and for assistance in realising the piece.)

Another reason for owning one’s own equipment was the tenuous nature, in my experience at least, of connections with institutions. Many of us have devoted several years of development to an institution-based system, only to then lose our job at that institution. This situation in Australia is getting even worse. Most of the people I know in academia are now only on year-to-year contracts. Even the status of “ongoing staff,” a far cry from tenure, but at least something, seems to be less and less available. And as for teaching assistants, forget it: they don’t exist anymore. In 2012, as part of my employment, I had to research the state of music technology education in Australia. I found that nationwide, in the period from 1999 to 2012, 19 institutions had either terminated or radically cut back their music technology programs. This occurred not only in small institutions, but across the board in major institutions as well. For example, four of Australia’s leading computer music researchers, David Worrall, Greg Schiemer, Peter McIlwain and Garth Paine, all lost their positions at institutions which they had helped build over many years. Note that we’re not talking about people leaving their jobs and being replaced by someone else, but about the positions themselves being eliminated. Given a situation like that, my decision decades ago to “own my own” seems wiser than ever.

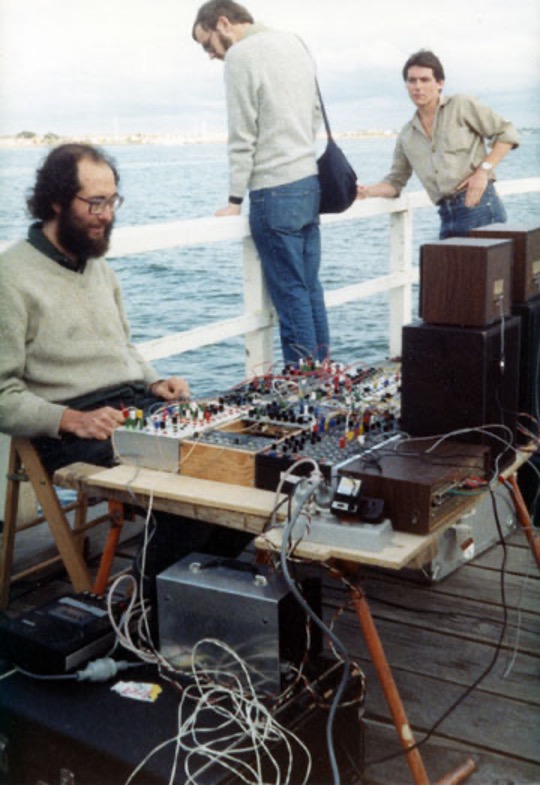

Here’s a picture which gives an example of the results of my “taking it to the people” in the early 1980s. Sounds made in the performance include: 1) clicking of shrimp; 2) electronic pitches in reply to the shrimp; 3) the “sizzzz” of motorboats, heard underwater; 4) waves; 5) a water gong; 6) random pitch glissandi of oscillators responding to the changing amplitude of the hydrophone output; 7) Debussy’s “La Mer” played under water and processed by waves; 8) general public sounds; 9) me talking to the public; 10) seagulls. This all-day performance was given at the community-oriented St Kilda Festival,[26] on St Kilda Pier, in 1983.

Taking it to “the people”: Warren Burt: Natural Rhythm 1983. Hydrophone, water gongs,

Serge, Driscoll and home-brew modules, Gentle Electric Pitch to Voltage, Auratone Loudspeakers.

St. Kilda Festival, St. Kilda Pier, Melbourne.

Photo by Trevor Dunn.

In the mid-80s, I switched over to using commercial computers. I had left academia at the end of 1981, so as a freelancer, I needed a cheaper computer. The AIM-65 single-board micro, which I used from 1981 to 1985, eventually proved not powerful enough, or reliable enough, for my needs. A series of PC-DOS-based machines then followed. Along the way, I continued my interest in unusual composing and synthesis systems. US, developed at the Universities of Iowa and Illinois, provided a lot of fun for a while. Arun Chandra’s Wigout[27] – his reconstruction of Herbert Brün’s “Sawdust”[28] – also proved a rich resource. I enthusiastically watched my friends in England, motivated by the same “poverty and enthusiasm-for-accessibility ethic” that I had, develop the Composers’ Desktop Project, although I didn’t actually start working with the CDP for quite a while. I sought out older programs when they were available, such as Gottfried Michael Koenig’s PR1,[29] which proved fruitful for a few pieces in the late 90s. I also soon became involved with software developers and began beta-testing things for them. John Dunn (1943-2018) of Algorithmic Arts was one of my most constant co-workers for about 23 years, and I created a number of the tools available in his SoftStep, ArtWonk and MusicWonk programs.

5. 1985-2000: Increased Accessibility

William Burroughs has a very funny anecdote in one of his stories in which the unfortunate traveller is invited by the Green Nun[30] to “see the wonderful work being done with my patients in the mental ward.” On entering the institution, her demeanour changes: “You must have permission to leave the room at any time.” Etc. And so the years passed. With that knowledge of time passing, we now come to the present, and what we see is a cornucopia of music-making devices, programs, etc., all available at modest cost.

At a certain point in the 1980s, computers got smaller, sprouted knobs and real-time abilities, and stopped being the domain of a few people with mainframes, becoming instead the domain of just about anyone interested. With the requisite education, social status, etc., of course. And on that idea of the computer sprouting knobs, I like the interface design of the GRM [Groupe de Recherches Musicales] Tools in France. Following Pierre Schaeffer’s ideas, they insist that all controls can be externally controlled, there are many possibilities for moving smoothly between different settings, and you don’t need to deal with a lot of numbers to use them.

At a certain point (maybe in the late 90s), the number of oscillators available also ceased to be an issue. The issue of accessibility then shifted to ways of controlling lots of oscillators. I remember Andy Hunt at the University of York worked on MidiGrid, a system that let disabled people control electronic music systems with whatever mobility they had. Development largely stopped by 2003. Just this year, an English company, ADSR systems, released a product called MidiGrid; judging from its YouTube video, I don’t think the two programs have anything to do with each other. And in the past couple of years, the AUMI team (Adaptive Use Musical Instruments)[31] has made marvellous strides in developing music control systems for tablet and desktop computers that make access to control even easier.

Also, at a certain point in the 1990s, access to sound quality (the economics of hi-fi) ceased to be an issue. That is, desire and convenience became more of an issue than economics. Prices on equipment have plummeted, and more and more power is available for less money. New paradigms of interaction have emerged, such as the touch screen and other new performance devices, and just about anything one could want is now available fairly inexpensively. Faced with this abundance, one can be bewildered, or overwhelmed, or delighted and plunge into working with all the new tools, toys and paradigms that are here.

Here are some pictures that illustrate some of the changes that have occurred in the brief history of “computer music.”

John Cage and Lejaren Hiller working on HPSCHD with the Illiac 2, University of Illinois, 1968.

Backstage at a Trade Fair, Melbourne 2013.

Each of the Android tablet machines working off the laptop is more powerful than the Illiac 2,

and costs many times less.

Photo : Catherine Schieve.

Computer setup by Warren Burt for Catherine Schieve’s “Experience of Marfa.”

Astra Concerts, Melbourne June 1-2, 2013.

Two laptops and two netbooks controlled by Korg control boxes.

Photo: Warren Burt

Another view of the computer setup for Experience of Marfa.

Note the gong and the custom-made Sruti Box orchestra beyond the computers. Photo: Warren Burt.

This one’s for laughs.

This is a picture, supposedly from 1954, of what the RAND Corporation thought

that the average home computer would look like in 2004.

6. Post-2000 Period: Commuter Train Work and Computer Brain Work

There is one resource, however, which was expensive way back then, and has become even more expensive now. That resource is time: time to learn the new tools/toys, time to make pieces with the toys, and time to hear other people’s work and for others to listen to ours. In Australia, working conditions have deteriorated and expenses have risen, so that one now works longer hours for fewer resources. The days of working 3 days a week to earn just enough money to get by, and still having a couple of days to work on one’s art, seem to be gone, at least for now. In our totally economics-dominated society, time for non-economically oriented activities becomes a real luxury. Or, as Kyle Gann expressed it eloquently in his ArtsJournal.com Post Classic blog for August 24, 2013:

In short, we are all, every one of us, trying to discern what kind of music it might be satisfying, meaningful, and/or socially useful to make in a corporate-controlled oligarchy. The answers are myriad, the pros and cons of each still unproven. We maintain our idealism and do the best we can.

Another factor in the erosion of our time is the expansion of communications media. I don’t know about you, but unless I turn off the mobile phone and the email, there is very seldom a period of more than a half-hour when something is not calling for my urgent attention, be it in text, telephone, or email form. Many of us find ourselves in this situation: the ever-decreasing amount of time we have to work is constantly interrupted.

My own solution was to invest in a pair of noise-cancelling headphones and small but reasonably powerful netbook computers, and after 2016, increasingly powerful tablet computers, such as the iPad Pro, so that I could work on Victoria’s excellent commuter trains. When one is surrounded by 400 other people, with the modem switched off and the headphones preventing one from hearing the mobile, one can get at least an hour, each way, of uninterrupted compositional concentration time. Still, I wonder what the effect on my music has been when it is made in such a contained, tight, hermetic environment. I continue to compose in this manner and have written many pieces in this environment. In this piece, “A Bureaucrat Tells the Truth,” from “Cellular Etudes (2012-2013),” I combine sophisticated samples and crude 8-bit sounds lovingly reconstructed in Plogue’s softsynth Chipsounds:

Warren Burt, “A Bureaucrat Tells the Truth”

I sent the piece to David Dunn and his observation was:

One of the formal issues that came up for me was the idea of sound samples for midi control as found objects (in the same way that any musical instrument is a found object) that carry with them definite cultural constructs (tradition). Most composers usually want a piece to be located within one of these (bourges vs. Stanford vs. cage vs. western orchestra vs. world musics vs. noise music vs. jazz vs. spectral music vs. rinky-dink lo fi diy, etc.). This usually plays out with composers trying to constrain their timbral choices so as to define a singular associative context (genre). In these pieces you let the divergent cultures rub noses until they bleed.[32]

And it’s true. I want to have it both ways, or perhaps all ways. I see nothing wrong with being both high-tech and low-tech, with being both complex and elitist, AND proletarian.

Perhaps my isolation-booth composing environment on the train makes me cram more and more cultures side by side, just as all of us on that train, from so many cultures, are jammed in there side by side.

So, the time issue is more of a problem than ever. One reason for that is the accelerating rate at which new gear is released, which far outstrips my ability to seriously interrogate it. One of my strategies for composing involves looking at a piece of gear or software and asking, “What are the compositional potentials of this?” Not so much “What was it designed for?” but more “How can it be subverted?” Or, if that seems too Romantic, maybe asking “What can I do with this that I haven’t done before?” and “What does the Deep Structure of this tool imply?” Remembering the early days of electronic music, when people like Cage, Grainger and Schaeffer used equipment clearly designed for other purposes to make their music, I find myself in a similar position today. The best store for new music equipment that I’ve found in Melbourne is StoreDJ, which has a good selection, good prices and a knowledgeable staff. Where Cage and friends appropriated their gear from science and the military, I now find I’m appropriating some of my resources from the dance-music industry. Most recently (2020-22) I’ve been involved with the VCV Rack community. This is a group of programmers, led by Andrew Belt (see also Rack 2), who have been designing virtual modules which can be patched together, as analog hardware modules used to be (and still are), to make complex composing systems. I’ve contributed some of my circuit designs to Antonio Tuzzi’s NYSTHI project, which is part of the larger VCV project. There are over 2,500 modules now available, some of which are software duplicates of earlier types of modules, and some of which are unique and original designs which point the way to exploring new compositional potentials. The distinction, raised above, between the dance-music industry and resources for the “new music” or “experimental music” scenes has now largely disappeared. There are so many new resources out there, from all sorts of designers, with all sorts of aesthetic orientations, that one has an almost overwhelming variety of choices to select among.

A couple of years ago I quipped that there were far too many Japanese post-graduate audio engineering students with far too much time on their hands making far too many interesting free plugins for me to keep up with them all. Now, of course, the situation is far worse – or is that better? The amount of resources available for free, or very cheaply, in the VCV Rack project or in the iPad ecosystem is enough to keep me occupied for the next several lifetimes. And as long as I maintain an open-minded and exploratory attitude, they probably will.

Here are links to two videos showing work from about 10 years ago. The first, “Launching Piece,” uses five tablet computers.[33] At the time, I had just started working with this setup, and it was very nice to get out of the “behind the laptop” mode and into a greater physical engagement while performing. I’m very involved in having what Harry Partch called “the spiritual, corporeal nature of man”[34] be an integral part of my music making. The second, “Morning at Princes Pier,” uses an iPad processed through a venerable Alesis AirFX to make a series of timbrally fluid microtonal chords. And speaking of future shock, when I first bought the AirFX in 2000, I remember laughing at its advertising slogans – “The first musical instrument of the 21st century!” and “because now, everything else is just so 20th century!” In both these pieces, the new resources allowed me to finally get more physical, once again, in my performing.

Video: Warren Burt, “Launching Piece”

Video: Warren Burt, “Morning at Princes Pier”

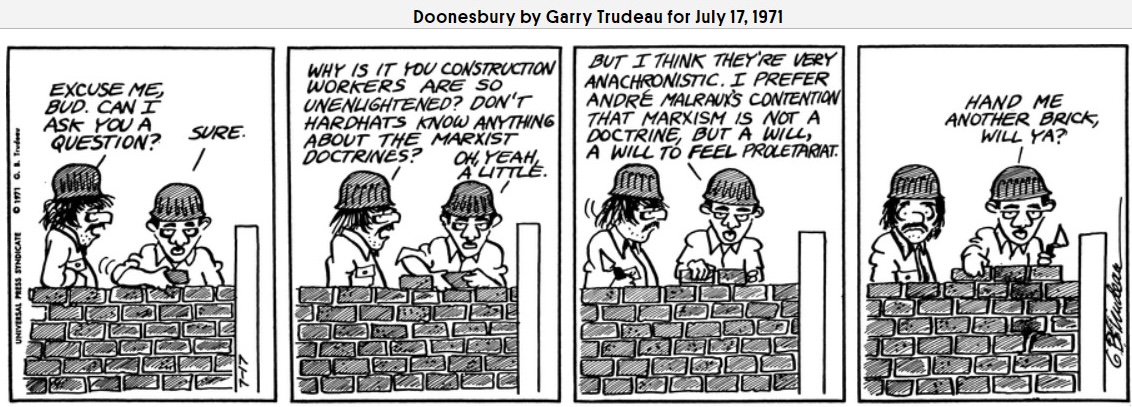

7. Today: Technological Music Utopia and Irrelevant Musicians

I have a friend who is an audiophile. He has a wonderful sound system that he spends a lot of time listening to. I proposed to him the idea that being an audiophile was an elitist activity, both in terms of the money his equipment cost, and in terms of the fact that he could actually afford the time to listen to things in that careful manner. I asked him if he could design an audiophile sound system that the working class could afford – that is, could he design a proletarian audiophile sound system? His reply was great: “For who? The people who spend $2,000 on flat-screen TVs?” I had to admit that he was right. The “working class” will spend a lot of money on equipment that will provide the entertainment they want. And I decided that, just as André Malraux had said that he thought Marxism was a will to feel, to feel proletarian, so too being an audiophile was a will to feel: a will to feel that high sound quality, and the ability to put time aside to use that equipment, were worth it.

SO: We’ve reached a kind of technological music utopia, and we’re daily surrounded by ideas, gear that implies ideas, and gear that can realise ideas – all at prices that a poor person – or at least a lower-middle-class teacher, even one who is going backwards economically – can afford. That’s lovely. What is not lovely is that we didn’t realise that when we reached the future, there would be so little place in it for us. For what hasn’t changed for us since the 60s is where we are: our position in relation to the larger world of music. As Ben Boretz[35] phrased it, so eloquently, we’re “the leading edge of a vanishing act.”[36] We’re a fringe activity beside an economic juggernaut. And the juggernaut uses our findings, and usually doesn’t acknowledge them.

Continually, the words we use to describe ourselves have been taken up by different styles. I’ve seen “new music,” “experimental music,” “electronic music,” “minimal music,” and the list goes on and on, used by one pop genre or another over the past decades with no acknowledgement of where those terms came from. In fact, these days, when my students talk about “contemporary music” they don’t mean us. They mean the pop music they are currently interested in. We, and our work, have continually been “undefined” by industry, popular culture and the media.

“Soundbytes Magazine”[37] was a little web publication that I contributed reviews of software and books to from about 2008 to 2021. The editor, Dave Baer, had been involved with computers since the 60s. He was a technician on the Illiac IV, then moved to the UCSD computer centre. He remembers attending the performance at Illinois of Cage and Hiller’s HPSCHD. He’s also a very good singer, appearing in the chorus of amateur opera productions. So, he’s not a stranger to our work. For a 2013 issue of Soundbytes, I suggested that he do an interview with me, since I was using computers in what I thought were some pretty interesting ways. His reply floored me – he’d be happy to do that, but we’d probably have to do a substantial introduction to place my work in context, since what I was doing was so far removed from the interests of the mainstream computer music maker! By this he didn’t mean, for example, that my note-oriented microtonal work was far removed from, let’s say, spectromorphological acousmatic work. No, he meant that my work, and all the other things we do, were far removed from the amateur dance music composer in their bedroom. So, in his view of popular consciousness in 2013, even the term we used to describe ourselves – “computer musician” – didn’t apply to us anymore. Once again, popular consciousness had robbed us of our identity. Maybe that’s the price you pay for being on the bleeding edge.

Of course, when you make a tool available to “everyone,” it’s more than likely that they’ll use it to make something they want to make, and not necessarily what you envisioned the tool would be used for. This has been going on for a long time. I can tell a funny story on myself in this regard. In the early 70s in San Diego, I was a member of a group called “Fatty Acid” which played the popular classics badly. (The group was led by cellist and musicologist Ronald Al Robboy. The other regular member of the group was composer, writer, and performer David Dunn.) Sort of a conceptual art musicological comedy schtick, with serious Stravinskyan neo-classical overtones – or maybe serious Stravinskyan spectromorphological pretensions. I can’t even begin to describe the profound impact being in Fatty Acid had on my composing and performing outlook. Then, in 1980, I encountered the Fairlight CMI. This was heaven. Now I could make my “incompetence music” by myself, without having to return to San Diego from Melbourne to play with my buddies. I was completely thrilled by this. When I met Peter Vogel and Kim Ryrie, the developers of the Fairlight, I couldn’t wait to play them my “bad amateur blues ensemble” music. They were, quite naturally, less than impressed. What I thought was a natural and exciting use of their machine was, for them, just plain weird. Stevie Wonder I was not. I remember Alvin Curran, way back when, telling me that I had to be careful who I played some of my more off-the-wall stuff to. Alvin, you were right. They were not, to my chagrin, my ideal target audience.

So, the pressure on us weirdos to conform is still there, and still intense. Let’s go back in time and listen to Mao Zedong, in 1942, at the Yan’an Forum on Literature and Art. The language here is doctrinaire Marxism, but substituting terms will make it seem extremely contemporary, despite its origins in another time and in a vastly different ideological world:

The first problem is: literature and art for whom? This problem was solved long ago by Marxists, especially by Lenin. As far back as 1905 Lenin pointed out emphatically that our literature and art should “serve… the millions and tens of millions of working people”… Having settled the problem of whom to serve, we come to the next problem, how to serve. To put it in the words of some of our comrades: should we devote ourselves to raising standards, or should we devote ourselves to popularization? In the past, some comrades, to a certain or even serious extent, belittled and neglected popularization… We must popularize only what is needed and can be readily accepted by the workers, peasants and soldiers themselves.[38]

Substitute “target audience” for “the workers, peasants and soldiers” and “making a saleable product” for “popularization” and it becomes pretty clear that whether the system is capitalist or communist, they want us all to dance to their tune.

In the 1970s, Cornelius Cardew, from his Marxist-Leninist perspective, exhorted us to “shuffle our feet over to the side of the people, and provide music which serves their struggle.”[39]

Today, the dance-music scene exhorts us to (Melbourne) shuffle our feet over to the side of the people and provide music which serves their struggle to groove.

Today, the film-music industry exhorts us to shuffle our feet over to the side of the industry and provide music which serves their narratives.

Today, the game-music industry exhorts us to shuffle our feet over to the side of the industry and provide music and algorithms which serve as a target for their target audience.

Well, maybe we don’t want to shuffle. Maybe we want to remain what Kenneth Gaburo called “Irrelevant Musicians.”[40] Maybe we want to be arrogant enough to make a music which demands its own supply and supplies its own demands. I’m not sure I agree with Gaburo when he said, “If the world at large will one day awaken, it will need something to awaken to.” I think the world at large will probably one day provide the things it needs for its own awakening. But I understand where Gaburo is coming from. For in opposition to all the commodity-oriented thinking, some of us think of music as a gift, not a sales price-point. In recent years, Bandcamp has seemed to be a place where people can create a community which is interested first in music as a means of aesthetic or informational exchange, and only secondarily as a market item.

8. Conclusion

So, we were an opposition years ago, and we’re still an opposition now. Somewhere in the past few months I read a statement which appalled me. It was something like, “Every deeply held aesthetic position now becomes just another preset in the composing arsenal.” In the past, I had reflected a bit ironically on the fact that, for example, FM and the Karplus-Strong algorithm, things which hard-working people had devoted a substantial part of their lives to, were now just timbral options in a softsynth or in a software synthesis module; but I had sort of expected that new technological ideas would be absorbed into the larger vocabulary of contemporary techniques. This statement, however, implied that compositional ideas were now just so many recyclable resources, more grist for the great post-modern (or “alter-modern,” to quote British critics) sausage machine. True enough maybe, but disturbing, nonetheless.

Have we really arrived, then, at a situation where our ubiquitous tools are democratised? Or has “the industry” offered us only a limited range of resources, those which won’t rock the 4/4 boat? I think the answer is both. The resources are there for people to use. It’s up to us to keep reminding people of what other potentials there are to be explored, and how the new technological utopia can provide them with the means for exploration and even self-transformation. To do that, we (that’s you and me, brother) probably have to struggle against the media which wants to disavow our inconvenient existences, but that struggle is worth it, in that we will be one of several groups of people who will keep alternative and transformational modes of thought alive, and available to those with curiosity and the desire to explore.

What’s left to us? What’s left is work. Work which expands consciousness; work which provides the opportunity for changes in perception; work which attempts to bring about changes in society or provides a model for the kind of society we want to live in; work which re-affirms our identity as a unique and valuable part of our society. Work which we need to get back to, in an uninterrupted manner. As the Teenage Mutant Ninja Turtles said, or was it Maxwell Smart, or Arnold Schoenberg? – “It’s a dirty job, but someone has to do it.”

Warren Burt, Nightshade Etudes 2012-2013 #19 – Tomato Muted Steinway

Microtonal scale based on Erv Wilson’s “Moment of Symmetry” work

Timbre – muted piano from Pianoteq Physical Modeling synth

DNA protein patterns from NIH gene data bank

DNA composing software – ArtWonk by Algorithmic Arts

Composing studio: V/Line regional commuter trains, Victoria

Protein patterns from tomato DNA are applied to pitch, dynamics and rhythm, and played as a polyrhythmic canon on a virtual Steinway piano with mutes.
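The program note describes protein patterns driving pitch, dynamics and rhythm in a polyrhythmic canon, on a scale drawn from Erv Wilson’s Moment of Symmetry work. As a purely hypothetical illustration of that kind of mapping (it is not a reconstruction of the ArtWonk setup used for the piece), the Python sketch below builds a scale by stacking a generator within an octave, checks the two-step-size property commonly used to characterise MOS scales, and maps each amino acid to a pitch, velocity and duration. The generator size, amino-acid ordering, velocity and duration rules, tempo ratios and the sample sequence are all assumptions made for the example.

```python
"""Hypothetical sketch: a protein sequence mapped onto a generated
(Moment-of-Symmetry style) scale and played as a two-voice canon.
All mapping rules here are illustrative assumptions."""
from fractions import Fraction

# The 20 standard amino acids in one-letter code, alphabetical order (assumed ordering).
AMINO_ACIDS = "ACDEFGHIKLMNPQRSTVWY"


def mos_scale(generator_cents: float, size: int, period_cents: float = 1200.0) -> list[float]:
    """Stack the generator `size` times, reduce each result into the period, and sort."""
    return sorted((i * generator_cents) % period_cents for i in range(size))


def step_sizes(scale: list[float], period_cents: float = 1200.0) -> set[float]:
    """Distinct step sizes, including the step that wraps around to the next period."""
    steps = [b - a for a, b in zip(scale, scale[1:])]
    steps.append(period_cents - scale[-1] + scale[0])
    return {round(s, 6) for s in steps}


def is_mos(scale: list[float], period_cents: float = 1200.0) -> bool:
    """True when the scale has exactly two distinct step sizes."""
    return len(step_sizes(scale, period_cents)) == 2


def map_residue(residue: str, scale: list[float]) -> tuple[float, int, Fraction]:
    """Map one amino acid to (pitch in cents, velocity, duration in beats)."""
    index = AMINO_ACIDS.index(residue)
    degree, octave = index % len(scale), index // len(scale)
    pitch = scale[degree] + 1200.0 * octave
    velocity = 40 + (index * 5) % 80          # stays within 40..115
    duration = Fraction(1 + index % 3, 2)     # 1/2, 1 or 3/2 beats
    return pitch, velocity, duration


def canon(sequence: str, scale: list[float],
          tempo_ratios=(Fraction(1), Fraction(3, 2)),
          entry_offsets=(Fraction(0), Fraction(2))):
    """Each voice plays the same material; its durations are multiplied by its
    tempo ratio (3/2 = a slower voice), giving a 2:3 polyrhythmic canon.
    Returns (voice, onset, pitch, velocity, duration) events sorted by onset."""
    events = []
    for voice, (ratio, offset) in enumerate(zip(tempo_ratios, entry_offsets)):
        onset = offset
        for residue in sequence:
            pitch, velocity, duration = map_residue(residue, scale)
            scaled = duration * ratio
            events.append((voice, onset, pitch, velocity, scaled))
            onset += scaled
    return sorted(events, key=lambda e: (e[1], e[0]))


if __name__ == "__main__":
    scale = mos_scale(700.0, 7)   # 0, 200, 400, 600, 700, 900, 1100 cents
    fragment = "MASSKLLV"         # made-up fragment, not real tomato data
    for event in canon(fragment, scale)[:6]:
        print(event)
```

With a 700-cent generator, seven stacked fifths give two step sizes (200 and 100 cents), while six give three, so only the former passes the check. Exact `Fraction` durations keep the two voices’ onsets from drifting through floating-point rounding.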

1. Cat Hope, composer, flute and bass performer “who creates music that is conceptually driven, in animated formats for acoustic / electronic combinations as well as improvisations.” Cat Hope

2. Susan Frykberg (1954-2023) was a composer (New Zealand) who lived in Canada from 1979 to 1998. See wikipedia, Susan Frykberg

3. Joel Chadabe (1938-2021), composer (United States), “author, and internationally recognized pioneer in the development of interactive music systems.” wikipedia, Joel Chadabe

4. The Center for Music Experiment was, between 1972 and 1983, a research center attached to the music department of the University of California San Diego.

5. A PDP-11 computer. See wikipedia PDP-11.

6. Ed Kobrin, a pioneer of electronic music (United States). He created a very sophisticated hybrid system: Hybrid 1-V. openlibrary Ed Kobrin.

7. The Serge synthesizers were created by Serge Tcherepnin, a composer and builder of electronic musical instruments: wikipedia Serge Tcherepnin. See also: radiofrance: Archéologie du synthétiseur Serge Modular

8. Famous nudist beach near UCSD. See wikipedia

9. Kenneth Gaburo (1926-1993), composer (United States). At the time mentioned in this article, he was a professor in the Music Department at UCSD. See wikipedia Kenneth Gaburo

10. Catherine Schieve is an intermedia artist, composer, and writer – and lecturer in Performance Studies. She lives in Ararat, in central Victoria (Australia). See astramusic.org; and rainerlinz.net

11. For example, the New England Digital Synthesizer (not yet evolved into the Synclavier) wikipedia, and the Quasar M-8 (not yet evolved into the Fairlight CMI) artsandculture

12. Georgina Born, Rationalizing Culture: IRCAM, Boulez, and the Institutionalization of the Musical Avant-Garde, Berkeley, Los Angeles, London: University of California Press, 1995.

13. George Lewis, composer, performer, and scholar of experimental music, professor at Columbia University, New York. wikipedia George Lewis

14. Graham Hair, composer and scholar (Australia). See wikipedia Graham Hair

15. Stanley Lunetta (1937-2016), percussionist, composer, and sculptor (California).

16. Ron Nagorcka, composer, didgeridoo and keyboards player (Australia). See wikipedia Ron Nagorcka

17. Ernie Althoff, musician, composer, instrument builder and visual artist (Australia). See wikipedia Ernie Althoff

18. Graeme Davis, musician, and performance artist. daao.org.au Graeme Davis

19. Alvin Lucier (1931-2021), composer (United States). See wikipedia Alvin Lucier and, for “I am sitting in a room”: youtube

20. Joel Chadabe had begun to work with the New England Digital Synthesizer, and with Roger Meyers had developed a program called Play2D to control it.

21. Tristram Cary (1925-2008), composer, pioneer of electronic music and musique concrète in England and then in Australia. See wikipedia Tristram Cary

22. George Lewis in New York showed me his work with the Rockwell AIM-65, and he mentioned to me the language FORTH. A little later, Serge Tcherepnin gave me a chip that made FORTH run on the AIM. This plunged me into serious computer programming for what might be the first time.

23. My Rockwell AIM-65 computer had three clocks on it, all of which counted down from a common source. You could feed numbers into each clock, and it would play subharmonics (divide-downs) of the master clock, which was running at about 1 MHz. This system could easily be interfaced with my Serge synthesizer.

24. The three clock/oscillators of the AIM were then processed through the analogue circuitry of the Serge.

25. Harry Partch (1901-1974), composer and instrument builder (United States). See wikipedia Harry Partch

26. St Kilda is a Melbourne suburb (Australia).

27. Arun Chandra, composer and conductor. See evergreen.edu Arun Chandra

28. Herbert Brün: wikipedia Herbert Brün and “Sawdust”: evergreen.edu.au Sawdust

29. Gottfried Michael Koenig (1926-2021), German-Dutch composer. See wikipedia Gottfried Michael Koenig

30. William Burroughs, The Green Nun, from The Wild Boys: youtube The Green Nun

31. The AUMI system is designed to be used by anyone at any level of ability – depending on how it’s programmed, the user can perform it at any level of physical ability. See AUMI

32. David Dunn, email to Warren Burt, late 2014.

33. Two Android-based tablets, two iOS-based tablets, and a Windows 8 tablet in Desktop mode.

34. Harry Partch: “The Spiritual Corporeal nature of man” from “Harry Partch in Prologue” on bonus record for “Delusion of the Fury”, Columbia Masterworks – M2 30576 · 3 x Vinyl, LP. Box Set · US · 1971.

35. Ben Boretz, composer and music theorist (United States). See wikipedia Ben Boretz

36. Ben Boretz, If I am a Musical Thinker, Station Hill Press, 2010.

37. Soundbytes Magazine and Dave Baer (editor): since this article was written and revised, all references to Soundbytes Magazine have disappeared from the web. I hope to release a compilation of the reviews I wrote for it on my www.warrenburt.com website sometime in late 2024.

38. Mao Zedong, Talks at the Yan’an Forum on Literature and Art, May 1942, quoted from the French edition, Interventions sur l’art et la littérature. materialisme-dialectique